Why Even Bother Compressing Prompts?

Large prompts are the new monoliths.

If you’ve ever tried to stuff a serious extraction spec, a few pages of dense tables or configs, and long-running conversation history into a single LLM call, you already know the pain:

- Context windows get cramped.

- Tokens get expensive.

- Latency creeps up.

- And the “just add more context” strategy quietly stops scaling.

Hieratic Prompt Compression is my attempt to push back on that—without spinning up yet another LLM just to shrink prompts. It’s being explored as an experimental feature for Tokuin, my CLI for token estimation and provider-aware load testing (GitHub).

The core question of this post:

Can we build a deterministic, structure-aware compression layer that shrinks prompts by 50–80% while preserving downstream task fidelity?

And maybe more importantly: is it worth doing, or is this just an over-engineered curiosity?

This is not a feature announcement. It’s a proposal in public, and I’d like your help pressure-testing it.

Why “Hieratic”?

The name “Hieratic” comes from the hieratic script used in ancient Egypt: a compact, scribal shorthand for hieroglyphs that preserved meaning while being much faster to write.

That’s roughly the spirit here:

- We want a compact, structured shorthand for long prompts.

- We care about preserving the semantic load, not the surface form.

- We want something a “scribal” system can produce deterministically, instead of asking another model to improvise a summary.

Hieratic is meant to be that shorthand: a structured, compressed representation of your original prompt that an LLM can still “read” effectively.

What I’m Proposing (in Plain Terms)

The rough sketch is:

A non-LLM, structure-aware compression pipeline that:

- treats instructions and structured documents differently,

- aggressively removes boilerplate,

- reuses repeated snippets via a context library,

- and supports incremental compression over long histories.

More concretely:

-

Extractive, not generative

We’re not asking another model to “summarize” the prompt. We’re selectively dropping low-value text and keeping the high-signal bits intact. -

Structure-aware

JSON, HTML tables, and BNF-like grammars are treated as verbatim document blocks. Only the natural language instructions around them get compressed. -

Library-driven boilerplate removal

Repeated extraction instructions, legal boilerplate, or scaffolding can be recognized and replaced by compact references using a reusable context library. -

Incremental compression

For long-running flows, only the newly dropped parts of the conversation get compressed into anchored summaries. We don’t re-summarize the entire history on every call.

The output is a “Hieratic” prompt: a compact, structured representation that the application can expand or feed directly into an LLM.

Why This Might Be Worth Doing

From a product and engineering standpoint, a feature like this could unlock some real benefits:

-

Token savings at scale

If you’re sending the same extraction spec or long policy docs over and over, shaving 50–80% of that cost starts to matter. -

Longer, richer prompts

A smaller prompt budget per call means:- More examples,

- More “why this matters” context,

- More system-level constraints, without immediately slamming into context window limits.

-

Latency and stability

Fewer tokens → lower latency, more predictable runtime, fewer timeouts and retries. -

Determinism & debuggability

Compression is rule-based:- Same input → same compressed output.

- Easy to diff and inspect what was kept vs dropped.

- Failure modes can be reasoned about and tested explicitly.

-

Better fit for structured tasks

Many real-world prompts mix instructions with structured data (tables, JSON, etc.). A structure-aware compressor is a more natural fit than a generic summarizer.

If this works even moderately well, Hieratic could become a core building block for workflows where prompts—not models—are the main bottleneck.

Why This Might Be a Terrible Idea

Being honest about the risks:

-

We silently destroy task fidelity

- The dangerous failure mode is not “the model throws an error”, it’s “the model quietly stops extracting certain fields.”

- Under aggressive compression, subtle but important constraints and corner cases can just, disappear.

- Research like 500xCompressor reports retaining ~62–73% of performance under heavy compression; that may be fine for some tasks, terrifying for others.

-

We overfit to a narrow prompt shape

- The early design assumes prompts that look like:

- a long instruction block, plus

- a structured document (tables, configs, schemas, etc.).

- If your prompts are mostly conversational, mostly code, or heavily multimodal, these strategies may not transfer well.

- The early design assumes prompts that look like:

-

Evaluation is non-trivial

- To take this seriously, we’ll need:

- a proper “before vs after compression” benchmark,

- real extraction and reasoning tasks, not toy examples,

- and metrics beyond “tokens saved” (precision/recall on fields, calibration of failure modes, etc.).

- To take this seriously, we’ll need:

If we’re not disciplined, we’ll end up with a fancy token counter that quietly degrades the very tasks this was supposed to help with.

A Bit of Research Backing

This idea borrows from existing work rather than inventing everything from scratch:

-

500xCompressor: Generalized Prompt Compression for Large Language Models

Shows that prompts are highly compressible (6×–480×) by learning a compressed “language” while retaining 62–73% of task performance. Key takeaways:- Prompts contain a lot of low-information redundancy.

- You can compress aggressively if you protect information-bearing tokens.

→ 500xCompressor: Generalized Prompt Compression for Large Language Models

-

NAACL 2025 survey on prompt compression

Surveys extractive vs. abstractive compression and token-pruning strategies, emphasizing:- trade-offs between compression ratio and task fidelity,

- the importance of preserving structure for technical prompts.

→ NAACL 2025 prompt compression survey (PDF)

-

Factory.ai – “Compressing Context”

Describes a production-ready strategy for incremental, anchored summaries:- maintain a persistent conversation state,

- compress only the span that’s about to fall out of context,

- avoid repeatedly re-summarizing old history.

→ Factory.ai: “Compressing Context”

Where Hieratic diverges is in deliberately not adding another model to the loop. The goal is a deterministic, rule-based compressor that plays nicely with structured prompts and long-running pipelines.

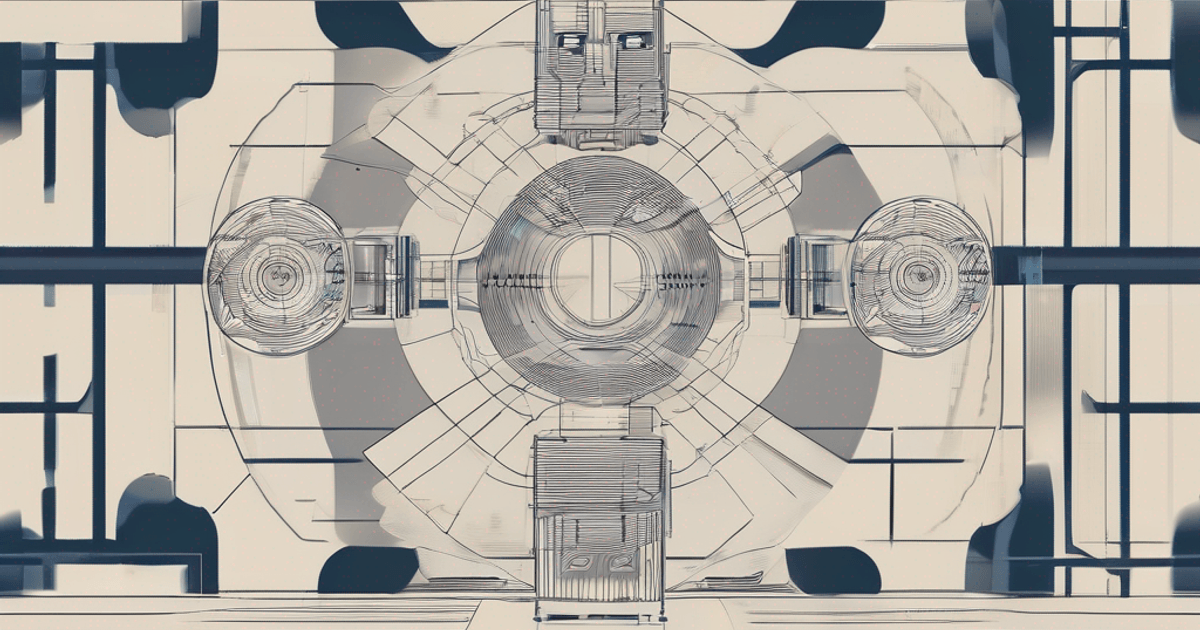

Rough Shape of the System

Here’s the high-level architecture I’m exploring.

1. Split instructions vs document

Before we drop any tokens:

- Instruction block: natural-language instructions and commentary.

- Document block: structured tables, JSON payloads, grammars, etc.

Only the instruction block is compressed.

Document blocks are treated as verbatim and left untouched.

2. Pattern extraction & context library (optional)

Given a corpus of prompts, a pattern extractor:

- scans for frequently repeated fragments (boilerplate instructions, shared scaffolding),

- builds a reusable context library,

- allows future prompts to reference those snippets instead of inlining them.

This is similar in spirit to a learned codebook (as in research like 500xCompressor), but done in a deterministic, human-auditable way.

3. Extractive compression over instruction blocks

For instruction text, the compressor:

- Sets a token budget based on a compression level (

Light,Medium,Aggressive) or explicit target ratio. - Segments text using structure-aware heuristics:

- headings (

#,##), - “Definition:”, “Location:”, “Response Format:” markers,

- parameter blocks (

Extracted_Value,Doc_Page_Number, etc.).

- headings (

- Scores segments such that:

- hard constraints and response formats are hard-kept,

- explanatory text and examples are more compressible.

- Implements a greedy selection: keep highest-scoring segments until the budget is spent.

The intent is to preserve the “spine” of the instructions while trimming repetition and soft context.

4. Encode as a Hieratic prompt

The compressed result is organized into a Hieratic structure, with sections like:

@ROLE– who the model is supposed to be,@TASK– what it’s supposed to do,@FOCUS– which fields / behaviors matter most,@EXAMPLES– optionally compressed or referenced,@RECENT– most recent interaction span,@ANCHOR[...]– anchored summaries of older context.

It’s essentially a compact, structured representation of your original prompt.

5. Incremental mode for long histories

In incremental mode, the compressor:

- loads a previous compression state,

- identifies the “oldest” span that’s about to be evicted from context,

- compresses that span into a new

@ANCHOR[...], - keeps the most recent N tokens uncompressed.

Over time, you get a stack of anchors plus a fresh @RECENT tail, keeping context current without growing linearly with the full history.

What I’d Love Feedback On

This is the experimental part. I’d love to hear from people who:

- run LLMs in production with big prompts,

- have tried prompt compression in anger,

- or have opinions on where this kind of feature should live in a system like Tokuin.

A few specific questions:

-

Evaluation

- How would you design a real benchmark for this?

- Which tasks / datasets would you use to prove that we’re not quietly breaking critical behaviors?

-

Failure modes

- Where have compression strategies bitten you before?

- Are there patterns or structures you’d mark as “never compress this”?

-

Integration into a stack like Tokuin

- At what layer would you expect to see a feature like this?

- Right before the LLM call?

- As part of a prompt-building pipeline?

- As a standalone preprocessor with its own cache?

- At what layer would you expect to see a feature like this?

-

Alternatives & trade-offs

- Would you lean towards learned compressors instead?

- Are there simpler heuristics that get most of the benefit without this level of complexity?

-

Product shaping

- Should this live as:

- a first-class Tokuin feature,

- an “experimental / power user” option,

- or a separate library that Tokuin can plug into?

- Should this live as:

If you have opinions—positive or negative—I’d genuinely appreciate hearing them.

How This Fits Into Tokuin (Right Now)

As of today, Hieratic Prompt Compression is experimental and aspirational:

- It’s being explored as a potential feature for Tokuin, not a locked-in roadmap item.

- Any implementation will start as:

- an opt-in, feature-flagged module,

- with clear disclaimers about the risks,

- and tooling to inspect exactly what was compressed.

Long term, if the idea survives scrutiny, I could imagine:

- per-project compression profiles,

- UI/CLI tools for inspecting “before vs after” prompts,

- and tighter integration with context management and retrieval strategies.

But we’re not there yet. For now, I’m trying to answer a simpler question:

Can a Hieratic-style, rule-based compressor earn its place inside Tokuin, or should we keep prompts uncompressed and invest elsewhere?

If you’ve navigated similar trade-offs—or you’re just curious and want to poke holes in this idea—I’d love to hear from you.

References

-

500xCompressor: Generalized Prompt Compression for Large Language Models

https://arxiv.org/abs/2408.03094 -

NAACL 2025 Prompt Compression Survey

https://aclanthology.org/2025.naacl-long.368.pdf -

Factory.ai – “Compressing Context”

https://factory.ai/news/compressing-context